3.1 Dartmouth and Global Counterparts

The summer of 1956 at Dartmouth College marked a pivotal moment in computational history, but not for the reasons typically celebrated. The 1955 proposal by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon introduced the term "artificial intelligence" and launched what would become a global research enterprise. Yet its central conjecture, that "every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it" [Core Claim: McCarthy et al., Dartmouth Proposal, 1955], represented just one thread in a worldwide tapestry of efforts to create thinking machines.

The Global Context of Symbolic AI

The Dartmouth workshop occurred against a backdrop of parallel developments across continents. At Kyoto University, researchers led by Setsuo Ohsuga were pioneering pattern recognition systems that would later influence global AI development [Context Claim: Ohsuga, Pattern Recognition Society of Japan, 1962]. European institutions, building on their wartime cryptographic expertise, pursued logic-based approaches to machine reasoning. The Soviet Union's cybernetics programs, despite ideological constraints, contributed mathematical frameworks for adaptive systems that would prove foundational decades later [Context Claim: Gerovitch, From Newspeak to Cyberspeak, 2002].

What emerged from this global ferment was not a single approach to artificial intelligence, but a constellation of methods united by a common belief: that intelligence could be captured through symbol manipulation and logical reasoning. The systems that resulted were indeed impressive—and revealingly narrow.

Achievements of the Symbolic Era

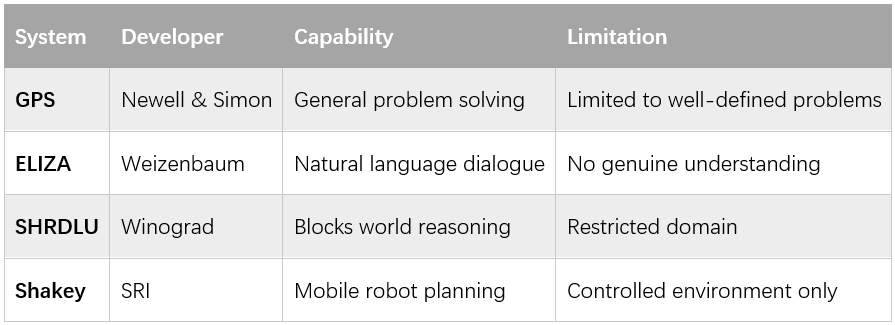

The General Problem Solver (GPS), developed by Allen Newell, J. C. Shaw, and Herbert Simon between 1957 and 1959, demonstrated that machines could tackle diverse problems using unified principles [Core Claim: Newell & Simon, Human Problem Solving, 1972]. GPS solved the Tower of Hanoi puzzle, the missionaries and cannibals problem, and simple theorem-proving tasks through means-ends analysis: it repeatedly compared the current state with the goal, chose an operator that reduced the difference, and set up subgoals whenever that operator could not yet be applied.

Joseph Weizenbaum's ELIZA (1964-1966) created perhaps the era's most memorable demonstration of machine intelligence [Core Claim: Weizenbaum, Communications of the ACM, 1966]. By simulating a Rogerian psychotherapist through pattern matching and response templates, ELIZA convinced many users—including sophisticated computer scientists—that they were conversing with a genuinely understanding system. Yet Weizenbaum himself emphasized that ELIZA possessed no real comprehension, merely clever linguistic manipulation.
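The pattern-matching-plus-template mechanism described above can be sketched in a few lines. This is a minimal illustration only: the rules, the `REFLECTIONS` table, and the function names are invented for this example and are not taken from Weizenbaum's original script.

```python
import re

# Hypothetical rules for illustration: (pattern, response template) pairs,
# tried in order. The captured fragment is echoed back inside the template.
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."

# Swap first-person words for second-person ones so the echo reads naturally.
REFLECTIONS = {"my": "your", "me": "you", "i": "you", "am": "are"}


def reflect(fragment):
    """Rewrite a captured fragment from the user's point of view to the program's."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(utterance):
    """Return the template of the first matching rule, or a stock reply."""
    for pattern, template in RULES:
        match = pattern.search(utterance)
        if match:
            captured = reflect(match.group(1).rstrip(".!?"))
            return template.format(captured)
    return DEFAULT_REPLY


print(respond("I need a break from my job."))
print(respond("The weather is nice"))
```

Nothing in the sketch models meaning: the reply is assembled entirely from surface text, which is the point Weizenbaum made about ELIZA.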

The Stanford Research Institute's Shakey robot (1966-1972) represented the era's most ambitious integration of AI capabilities [Core Claim: Nilsson, Shakey the Robot, 1984]. This mobile system combined computer vision, natural language processing, and automated planning to navigate simple environments and manipulate objects. Shakey could interpret commands like "Push the box off the platform" by constructing plans that involved multiple intermediate steps.

3.2 The Search Paradigm and Its Limits

The symbolic era's fundamental insight was that intelligence could be modeled as search through problem spaces [Interpretive Claim]. Every puzzle, theorem, or planning task could be represented as finding a path from an initial state to a goal state through a space of possible moves. This framework unified diverse AI applications under a single computational paradigm.
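As a concrete illustration of the state-space framing, the sketch below encodes the missionaries and cannibals puzzle as states and moves and finds a shortest solution with breadth-first search. The state encoding and function names are assumptions made for this example; it is not a reconstruction of how GPS or any historical program represented the puzzle.

```python
from collections import deque

# A state is (missionaries on left bank, cannibals on left bank, boat on left?).
START = (3, 3, True)
GOAL = (0, 0, False)

# Possible boat loads as (missionaries, cannibals); the boat holds 1 or 2 people.
MOVES = [(1, 0), (2, 0), (0, 1), (0, 2), (1, 1)]


def is_safe(m_left, c_left):
    """A state is legal if counts are in range and no bank has missionaries outnumbered."""
    if not (0 <= m_left <= 3 and 0 <= c_left <= 3):
        return False
    m_right, c_right = 3 - m_left, 3 - c_left
    left_ok = m_left == 0 or m_left >= c_left
    right_ok = m_right == 0 or m_right >= c_right
    return left_ok and right_ok


def successors(state):
    """Yield every legal state reachable with one boat crossing."""
    m_left, c_left, boat_left = state
    direction = -1 if boat_left else 1  # people leave the bank the boat is on
    for dm, dc in MOVES:
        nxt = (m_left + direction * dm, c_left + direction * dc, not boat_left)
        if is_safe(nxt[0], nxt[1]):
            yield nxt


def bfs(start, goal):
    """Return a shortest list of states from start to goal, or None."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state == goal:
            path = []
            while state is not None:
                path.append(state)
                state = parents[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parents:
                parents[nxt] = state
                queue.append(nxt)
    return None


solution = bfs(START, GOAL)
print(len(solution) - 1, "crossings")
```

Swapping in a different `successors` function and goal test turns the same search loop into a solver for another puzzle, which is the sense in which the framework unified different tasks.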

The Power of Systematic Search

The A* algorithm, developed by Peter Hart, Nils Nilsson, and Bertram Raphael in 1968, provided an optimal method for such searches [Core Claim: Hart et al., IEEE Transactions on Systems Science and Cybernetics, 1968]. A* ranks each candidate state by f(n) = g(n) + h(n): the cost already paid to reach the state plus a heuristic estimate of the remaining cost to the goal. When the heuristic never overestimates the true remaining cost (an admissible heuristic), A* is guaranteed to return an optimal solution, and a better-informed heuristic lets it expand fewer states along the way. This algorithm became fundamental to AI systems and remains crucial today for applications from satellite-navigation route planning to video game AI.
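A minimal sketch of A* on a small grid follows, to make the combination of cost so far and estimated remaining cost concrete. The grid, the function names, and the choice of Manhattan distance as the heuristic are assumptions for illustration; Manhattan distance never overestimates the remaining cost on a four-connected grid with unit step costs, so the result here is a shortest path.

```python
import heapq

# Hypothetical 3x4 grid for illustration: '#' cells are walls.
GRID = [
    "S#..",
    ".#..",
    "....",
]


def grid_neighbors(cell):
    """Yield (neighbor, step_cost) for the four-connected open cells around cell."""
    row, col = cell
    for d_row, d_col in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        r, c = row + d_row, col + d_col
        if 0 <= r < len(GRID) and 0 <= c < len(GRID[0]) and GRID[r][c] != "#":
            yield (r, c), 1


def manhattan(a, b):
    """Heuristic: grid distance ignoring walls, so it never overestimates."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def a_star(start, goal, neighbors, heuristic):
    """Return (cost, path) for a cheapest path, or None if the goal is unreachable."""
    # Frontier entries are (f, g, node, path), ordered by f = g + h.
    frontier = [(heuristic(start, goal), 0, start, [start])]
    best_g = {start: 0}
    while frontier:
        _, g, node, path = heapq.heappop(frontier)
        if g > best_g.get(node, float("inf")):
            continue  # a cheaper route to this node was already found
        if node == goal:
            return g, path
        for nxt, step_cost in neighbors(node):
            new_g = g + step_cost
            if new_g < best_g.get(nxt, float("inf")):
                best_g[nxt] = new_g
                f = new_g + heuristic(nxt, goal)
                heapq.heappush(frontier, (f, new_g, nxt, path + [nxt]))
    return None


cost, path = a_star((0, 0), (0, 3), grid_neighbors, manhattan)
print(cost, path)
```

The straight-line estimate from start to goal is 3, but the wall forces a detour, and the search returns a path of cost 7. Passing a heuristic that always returns 0 turns the same function into uniform-cost search, which finds the same cost but typically expands more cells.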

Chess exemplified both the promise and limitations of search-based approaches. While Claude Shannon had outlined the basic minimax strategy in 1950, actually implementing competitive chess programs proved extraordinarily challenging [Core Claim: Shannon, Philosophical Magazine, 1950]. The game's complexity, which Shannon estimated at roughly 10^120 possible games, meant that exhaustive search was impossible even with rapidly improving computers.

The Combinatorial Explosion

As problems grew larger, the number of possible states increased exponentially, making brute-force search computationally intractable. With roughly 35 legal moves available in a typical chess position, looking d half-moves ahead means examining on the order of 35^d positions. Chess programs of the late 1960s could therefore look only a few moves ahead before running out of time or memory. This forced AI researchers to develop heuristics, rules of thumb that guided search toward promising areas while pruning unlikely possibilities.
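The interaction between minimax and a lookahead limit can be sketched on a game small enough to search completely. The example below uses a simple take-away game (remove 1 to 3 stones; whoever takes the last stone wins) as a stand-in for chess. The game, the `evaluate` placeholder, and the scoring convention are assumptions chosen for illustration.

```python
def evaluate(pile):
    """Placeholder heuristic used at the depth cutoff: 0 means 'cannot tell'."""
    return 0


def minimax(pile, depth, maximizing):
    """Score a position for the maximizing player: +1 win, -1 loss, 0 unknown."""
    if pile == 0:
        # The player who just moved took the last stone and won.
        return -1 if maximizing else 1
    if depth == 0:
        return evaluate(pile)  # out of lookahead: fall back on the heuristic
    scores = [
        minimax(pile - take, depth - 1, not maximizing)
        for take in (1, 2, 3)
        if take <= pile
    ]
    return max(scores) if maximizing else min(scores)


# Deep search sees the true outcome; shallow search hits the cutoff first.
print(minimax(5, 10, True))
print(minimax(5, 1, True))
```

With enough depth the search proves that a pile of 5 is a win for the player to move, while a one-move lookahead returns only the uninformative cutoff value. In chess the full-depth search is never available, so the quality of the evaluation function at the cutoff largely determines the quality of play.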

These heuristics worked well within specific domains but failed to generalize across different problem types. A chess evaluation function that prioritized piece safety proved useless for theorem proving or trip planning. This domain specificity revealed a fundamental limitation: while search could solve well-defined problems efficiently, it couldn't capture the flexible, adaptive intelligence that humans display across diverse contexts [Interpretive Claim].

3.3 The Perceptron Controversy Revisited

Frank Rosenblatt's perceptron, introduced in 1957, offered a radically different approach to machine intelligence [Core Claim: Rosenblatt, Psychological Review, 1958]. Instead of programming explicit rules for reasoning, perceptrons learned from examples, adjusting connection weights based on training data to recognize patterns in visual or numerical inputs.
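The idea of adjusting connection weights from examples can be sketched with the classic perceptron learning rule on a toy task. This is a simplified software illustration with assumed names and parameters (`train_perceptron`, `lr`, `epochs`), not a model of Rosenblatt's hardware.

```python
def predict(weights, bias, x):
    """Threshold unit: output 1 if the weighted sum exceeds zero, else 0."""
    activation = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Learn weights from (input, target) pairs with the perceptron rule."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            delta = target - predict(weights, bias, x)
            if delta != 0:
                # Nudge the weights toward the target on each mistake.
                errors += 1
                weights = [w + lr * delta * xi for w, xi in zip(weights, x)]
                bias += lr * delta
        if errors == 0:
            break  # every training example is classified correctly
    return weights, bias


# Logical AND as a toy training set.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_SAMPLES)
print(weights, bias)
print([predict(weights, bias, x) for x, _ in AND_SAMPLES])
```

No rule for AND is written anywhere in the code; the behavior emerges from repeated corrections on the four examples.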

Early Promise and Media Hype

Initial demonstrations were genuinely impressive. The Mark I Perceptron, a room-sized machine built at the Cornell Aeronautical Laboratory, learned to distinguish squares from circles, recognize simple letters, and identify weather patterns in photographs [Core Claim: Rosenblatt, Principles of Neurodynamics, 1962]. The media seized on these achievements with characteristic enthusiasm. In 1958 The New York Times reported that the Navy expected the perceptron to be the embryo of a computer that "will be able to walk, talk, see, write, reproduce itself and be conscious of its existence."

Such claims proved premature. In their 1969 book "Perceptrons," Marvin Minsky and Seymour Papert provided a rigorous mathematical analysis that exposed severe limitations of single-layer networks [Core Claim: Minsky & Papert, Perceptrons, 1969]. Most critically, a single-layer perceptron cannot represent the XOR function, a simple logical operation whose output is true when exactly one input is true. The underlying requirement, called linear separability, means that a perceptron can only classify patterns whose classes can be divided by a straight line in two dimensions, or by a hyperplane in general.
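The XOR limitation can be checked directly. A single threshold unit computes w1*x1 + w2*x2 + b > 0. For XOR it would need b <= 0, w1 + b > 0, w2 + b > 0, and w1 + w2 + b <= 0. Adding the two middle inequalities gives w1 + w2 + 2b > 0, so w1 + w2 + b > -b >= 0, which contradicts the last one. The sketch below illustrates the same fact by brute force over a coarse grid of candidate weights. The grid and helper name are assumptions for this example, and the search is an illustration rather than a proof.

```python
from itertools import product

AND = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
OR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 1}
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

# Candidate values for w1, w2 and b: -4.0 to 4.0 in steps of 0.5.
GRID = [i / 2 for i in range(-8, 9)]


def separable(truth_table, grid=GRID):
    """True if some (w1, w2, b) on the grid reproduces the whole truth table."""
    for w1, w2, b in product(grid, repeat=3):
        if all(
            (w1 * x1 + w2 * x2 + b > 0) == bool(y)
            for (x1, x2), y in truth_table.items()
        ):
            return True
    return False


for name, table in (("AND", AND), ("OR", OR), ("XOR", XOR)):
    print(name, separable(table))
```

AND and OR each have many working weight settings on the grid, while no setting reproduces XOR, in line with the algebraic argument.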

The Debate's Lasting Impact

The Minsky-Papert critique was mathematically correct but historically controversial. While they acknowledged that multi-layer networks might overcome these limitations, they doubted that effective learning algorithms could be found for training such networks. This assessment is widely credited with discouraging neural network research for more than a decade, even though solutions would eventually emerge.

Contemporary analysis suggests the critique served both positive and negative functions [Interpretive Claim]. It provided necessary mathematical rigor in a field prone to overstatement, but it also may have delayed important developments by discouraging exploration of multilayer architectures. The debate illustrates the tension between mathematical precision and innovative exploration that continues to characterize AI research.

3.4 Legacy and Lessons

What Symbolic AI Achieved

Despite its ultimate limitations, the symbolic era established AI as a legitimate scientific discipline and created tools that remain valuable today. Logical reasoning systems enable automated theorem proving and software verification. Planning algorithms guide robotics and logistics systems. Natural language processing techniques evolved from early parsing systems pioneered in programs like SHRDLU.

The era's theoretical contributions proved equally important. The concepts of heuristic search, formal knowledge representation, and computational problem-solving established frameworks that continue to influence AI research. Modern neurosymbolic approaches attempt to combine symbolic reasoning's precision with neural networks' learning capabilities, acknowledging that both paradigms offer complementary strengths [Context Claim: Garcez et al., Neurosymbolic AI, 2021].

Why Symbol Manipulation Alone Couldn't Suffice

The fundamental challenge facing symbolic AI was the brittleness of hand-coded knowledge. Systems that performed brilliantly within their designed domains failed catastrophically when encountering novel situations. ELIZA could simulate therapeutic dialogue but couldn't genuinely understand patient concerns. Shakey navigated laboratory environments but couldn't adapt to real-world complexity.

This brittleness reflected a deeper problem: human intelligence appears to depend on massive amounts of implicit knowledge about how the world works [Interpretive Claim]. Capturing this common-sense understanding through explicit rules proved far more difficult than early researchers anticipated. The knowledge required for seemingly simple tasks—understanding that dropped objects fall, that people have motivations, that physical constraints limit possible actions—resisted formalization.

The First AI Winter's Broader Lessons

The funding reductions and institutional disappointments of the mid-1970s taught the AI community crucial lessons about managing expectations and the importance of empirical validation. Military sponsors, having invested heavily based on optimistic projections, redirected resources when promised capabilities failed to materialize [Context Claim: Crevier, AI: The Tumultuous History, 1993].

Yet this apparent setback prepared the foundation for future breakthroughs. The limitations of symbolic reasoning motivated the statistical approaches that would dominate the next era. The recognition that intelligence requires learning, not just clever programming, directed attention toward machine learning methods. Even the perceptron controversy, by clarifying the mathematical requirements for neural learning, set the stage for the backpropagation algorithm that would eventually enable deep learning.

The symbolic era thus reveals AI's characteristic pattern: each generation's limitations become the next generation's research priorities [Interpretive Claim]. Understanding this cycle helps explain why AI progress appears non-linear, with periods of rapid advancement followed by consolidation and redirection rather than smooth, incremental improvement.
